What do we value most in life? Health is an obvious choice, next to love and meaningful friendships. Money is certainly on the list too, but controversial, at least when pursued narrowly at the cost of everything else (though few would dispute that a good life depends on owning a sufficient amount of it).

Uncontroversial is the value of another item: knowledge. Our post-industrial society increasingly depends on the creation and transfer of know-how and technical expertise. We usually consider knowledgeable people as intelligent, which often correlates with higher social status and financial reward.

But what is knowledge? It is surprisingly hard to define. Does memorization of a lot of facts (e.g. historical dates) count as knowledge? Then your internet-connected computer would be the most knowledgeable entity ever created. Facts are data points. Taken by themselves, they have limited value if we can’t explain their existence and are unable to predict future facts. In other words, we lack understanding.

Furthermore, the value of knowledge depends on its usefulness to solve a problem. In particular, to solve my problem. Ask “How much do you know about X?” and I would reply “to accomplish what?” (which is nicer than an engineer’s favorite retort of “it depends…”). I know that a combustion engine needs oil and gas to function and have a dim idea about gears. Enough knowledge for me to drive a car, understand the meaning of warning lights and abstain from shifting into reverse while the car is moving forward. If you ask me, I know all there is about cars. However, if tomorrow I wanted to start a new career as an auto mechanic, I would immediately find out that I know very little.

Figuring out the purpose of knowledge affects how we think about it and fundamentally changes how we teach and learn. By asking about the reason for knowledge we move from passive consumption of facts to an active inquiry about the why, how, how well, and so on.

How purpose and knowledge relate to each other is the subject of the fascinating book “Knowledge as Design” by D.N. Perkins.

As the title suggests, the author considers knowledge not something that is out there, given to be consumed without critical analysis (he calls this “truth mongering”) but something that has a purpose, or, in a broader sense, a design.

The first thing to note is that the word design may be misleading. If you, like me, haven’t thought much about design before you may equate it with style (fashion, brands, websites) or a blueprint to build something (design of a house). The author takes a wider look and defines design more accurately:

Design is the human endeavor of shaping objects to purposes

Almost everything is a design. A knife is an object adapted to the purpose of cutting things. Academic knowledge is also designed - Newton’s laws are a mathematical tool to explain the motion of bodies. So are processes, claims, and even historic dates as a part of a larger story (for example, the year 1492 marks a milestone in western civilization). There are only a few things that are not designs (like natural phenomena).

So how does thinking about design help us with acquiring knowledge? Knowing the purpose of a design is crucial, but purpose alone only explains why a design exists. It does not say anything about how the design solves a problem.

The four design questions

The author gives us a tool to examine designs by formulating four design questions that make up the heart of the book.

The questions to ask about a design are the following:

- What is its purpose?

- What is its structure?

- What are the model cases of it?

- What are the arguments for and against its usefulness?

“Purpose” we have already discussed. The structure of a design deals with the parts, components, materials, relations, and so forth of the object in question. A knife has a cutting edge attached to a handle.

Model cases are examples of the object or knowledge (imagine photos of different types of knives). Model cases are in some sense mental models, but in a much narrower way (I come back to this later).

Arguments, the last of the four questions, evaluate a design. How well does it do its job? Given the purpose, is the design well-thought-out or could it be better? While the former three questions could be answered without much context, here we depend on our subjective understanding of the object at hand. I can’t say anything about the effectiveness of a design if I don’t understand what it does or how it differs from other solutions.

The book discusses at length how the four questions can be applied to practically everything. It turns out that looking at the world through design glasses is tremendously useful. Be it how to write an essay, how to come up with new insights, or how to improve learning and teaching in general.

Since knowledge plays such an important role in our life, it is worth looking at how this new way of thinking about knowledge (as design) compares to our default way (as information).

| Knowledge as information | Knowledge as design |
| --- | --- |
| passive | active |
| something to store and retrieve | something to apply |
| out there to memorize | result of human inquiry |
| no purpose | born out of purpose |
| impersonal | personal |

We shift from the left to the right, from the passive to the active, by connecting what we learn to each of the four design questions.

So how does all this help us with our mental models? Is one better than the other?

How do software mental models (SMM) compare with the four design questions (4DQ)?

The short answer is that 4DQs are a tool for evaluating the subject at hand and reaching a conclusion, while SMMs are a process that keeps evolving. There is overlap between the two but also significant differences. Let’s have a look at each of the four questions.

Model cases

We start with what is likely the most confusing part. Aren’t mental models the same as model cases? Not really. Mental models, at least the way I use them, are internal representations of reality that can differ significantly from the actual object. Model cases are examples (of object types, procedures, formulas, etc.), whereas mental models are simplifications that are (hopefully) so useful that they can explain complex processes with a few elegant analogies. Coming back to the simple example of a knife, model cases would present all the different types of knives (we could, for example, present their evolution from the stone age until today), whereas the mental model of a knife depends on what goal I have. In my case, I mostly think about knives in the context of a kitchen, so I have mental models about how the sharp side of a blade works and what knife to use for certain materials (e.g. bread versus meat). A chef has vastly more complex internal representations of knives. She might think about angles, handles, all kinds of materials, cutting techniques, and so on.

Structure

The structure of a design describes the elements and parts of the object or knowledge. Mental models include structure, too, but only those parts that are useful for achieving the goal. When learning something new, the structure of a model will be similar to the structure of the design. The more advanced the models get, the more abstract they become, until they may have nothing in common anymore with the actual object.

Purpose

The purpose of a design is similar to what I consider the goal of a mental model. However, in the case of SMMs, the goal is deeply personal, whereas the purpose of a design is meant to be independent of the learner.

The goal of a mental model gives clarity about what is important and what I can leave out. The goal evolves. In a design, the purpose is fixed - the reason the design exists stems from the purpose.

In reality, the purpose of a design is still to some degree subjective, because it depends on the knowledge of the learner. A physics beginner in school will come up with a different, simpler purpose for Newton’s laws than a university graduate.

Arguments

Arguably, arguments are the most important of the four questions because they require the learner to reach a conclusion based entirely on their understanding and experience. Purpose, structure, and model cases lay the foundation that arguments are built upon.

SMMs don’t have arguments at all. If they are so important, how can this be?

The reason can be found in the fact that SMMs are a process. Arguments and purpose are two sides of a coin. The argument describes how well a design accomplishes its purpose with the outcome of an evaluation. SMMs don’t have a final step. Instead, we evaluate models during the test phase, where we figure out where the models are inaccurate. Instead of giving a judgment, we correct the model and make it better during the refinement phase (or replace it with a better model if necessary). The argument is ingrained in the process.

In summary, we could say that SMMs are the agile version of 4DQ.

| Knowledge as design | Software Mental Models |
| --- | --- |
| Purpose | Goal of the mental model is personal and depends on the learner’s experience and aim. |
| Structure | The structure of SMMs may be similar to the real object but advanced models may not reflect reality at all. |
| Model cases | SMMs go beyond mere examples of designs. They can include model cases but don’t need to. |
| Arguments | The evaluation of a mental model happens during the test & refinement phase, always adapted to the goal of the learner. |

The 4DQs are a very useful tool to structure thinking, learning, and writing, and they are certainly useful for developing SMMs. The book can be repetitive at times as it goes through the many possible applications of the theory, but once the main ideas are understood it is easy to skip content and focus on the parts that interest you.

Can you spot the 4DQ in this post?

Writing an essay is among the use cases of the theory. Can you spot where in this post I have used the four questions?

Answer:

- The first half of this post explains the purpose and structure of the 4DQs. First I made claims about the importance of knowledge, and in particular, the value of looking at the purpose of knowledge.

- Then I described the structure of the 4DQs, describing each question.

- I finished the first half by completing my description of the purpose of the 4DQs, demonstrating the value of moving from seeing knowledge as information to considering knowledge as design.

- I use model cases of 4DQs throughout the post, most of the time using the example of a knife. And, to go fully recursive, this answer is another model case of the 4DQs.

- In the second half I give arguments by comparing in detail the 4DQs with SMMs, with the conclusion that while there’s overlap between the two ideas, SMMs fundamentally differ from 4DQs by being an ongoing process instead of a final evaluation of an object or piece of knowledge.

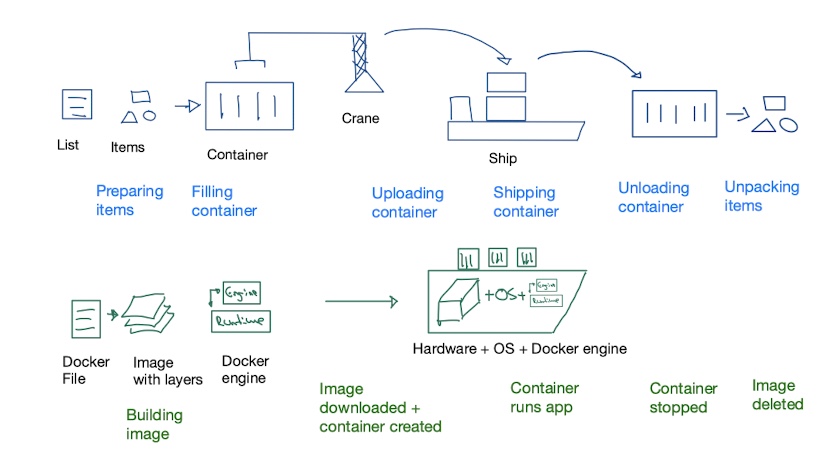

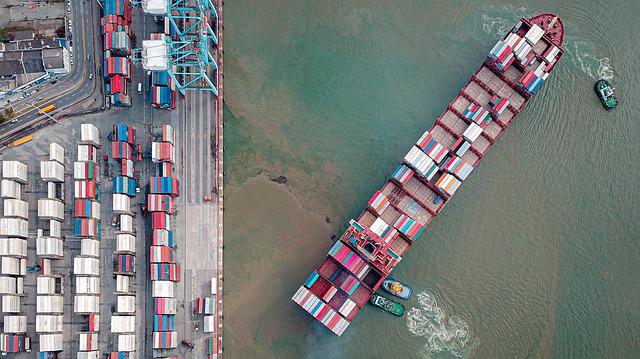
